Over the past few years, Facebook has copped a range of criticism about its content, from the damaging spread of misinformation to social media-fuelled catastrophes and crimes.

Most recently, one name has been heralded as the changemaker forcing the company to face its biggest reckoning yet.

Frances Haugen is a former Facebook employee, who was hired back in 2019 to build tools to investigate the potentially malicious targeting of information towards specific communities.

This week, she’s speaking out again. Here’s what you need to know about this modern-day heroine.

Why is she famous?

In September, Haugen became a global figure after she released tens of thousands of internal files depicting Facebook’s failure to keep its users safe from harmful content.

The documents she gathered formed the foundation of The Wall Street Journal’s Facebook Files series, published in the same month.

Since then, news outlets from across the world have published stories based on the leaked documents.

What has she done this week?

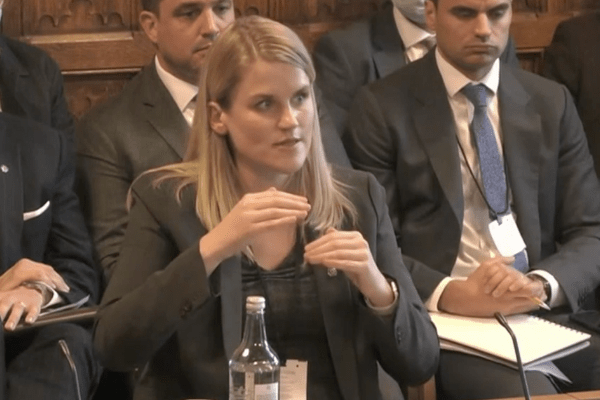

On Monday afternoon, London time, the 37-year-old algorithms designer testified in person before the joint committee scrutinising the Online Safety Bill.

What is the Online Safety Bill?

The Online Safety Bill is a piece of legislation that places a duty of care on social media companies to protect their users. If they fail to do so, they would face the threat of substantial fines.

The Online Safety Bill will apply to tech firms that allow users to post their own content or interact with each other: these have so far included Facebook, Instagram, Twitter, Snapchat and YouTube.

Google and OnlyFans will also be included.

The committee, which included British MPs and Lords, is hoping to improve a proposed law that places new duties on large social networks and subjects them to the checks and balances of Ofcom, the regulatory and competition government authority for media in the U.K.

Debate over the bill was reignited last week after the violent and shocking murder of Conservative MP David Amess, who was stabbed at a church in Leigh-on-Sea in Essex by a 25-year-old extremist.

Labour leader Keir Starmer demanded criminal sanctions for leaders of digital platforms who fail to clamp down on extremism.

This prompted Prime Minister Boris Johnson last week to commit to “tough sentences for those who are responsible for allowing this foul content to permeate the internet”.

What did she say at this committee?

On Facebook:

Haugen said Facebook’s own research revealed a concerning issue called “an addict’s narrative”, in which young users feel unhappy and cannot control their use of the platform, yet feel unable to stop using it.

“Facebook has been unwilling to accept even little slivers of profit being sacrificed for safety,” Haugen said.

She also spoke about Facebook’s inability to patrol content in various languages across the world.

“UK English is sufficiently different that I would be unsurprised if the safety systems that they developed primarily for American English were actually under-enforcing in the UK.”

“Those people are also living in the UK, and being fed misinformation that is dangerous, that radicalises people. It is substantially cheaper to run an angry hateful divisive ad than it is to run a compassionate, empathetic ad.”

“The median experience on Facebook is a pretty good experience,” she added.

“The real danger is that 20 percent of the population has a horrible experience or an experience that is dangerous.”

Haugen also spoke about the lack of resources at her former workplace.

“We were told to accept under-resourcing.”

“When I worked on counter-espionage, I saw things where I was concerned about national security, and I had no idea how to escalate those because I didn’t have faith in my chain of command at that point.”

On Instagram:

“[It is] more dangerous than other forms of social media.”

“I am deeply worried that it may not be possible to make Instagram safe for a 14-year-old, and I sincerely doubt that it is possible to make it safe for a 10-year-old.”

“The last thing they see at night is someone being cruel to them. The first thing they see in the morning is a hateful statement and that is just so much worse.”

She cited Facebook’s own research which exposed Instagram as more harmful than TikTok and Snapchat since the platform targets “social comparison about bodies, about people’s lifestyles, and that’s what ends up being worse for kids”.

After her appearance, Haugen took to Twitter to reiterate her advice:

“To start, we must demand: 1) Privacy-conscious mandatory transparency from Facebook. 2) A reckoning with the dangers of engagement-based ranking. 3) Non-content-based solutions to these problems — we need tools other than censorship to keep the world safe.”

What is her background?

Haugen was born and raised in Iowa. Her father was a doctor, and her mother had an academic career before becoming an Episcopal priest. She told the Wall Street Journal she has always been a rule-follower.

Haugen joined Facebook in June 2019, after a recruiter reached out to her the year before, and left the company in April.

She has previously worked at Pinterest Inc., Alphabet Inc.’s Google, Hinge, and other tech companies, focusing on creating algorithms that determine which content reaches users.

Watch Haugen’s committee appearance here, on parliamentlive.tv